ArXiv

Paper

GitHub

Source Code

Colab

Notebook Demo

Slides:

Our Approach

COLM Poster

Bonus Paper:

Vector Arithmetic

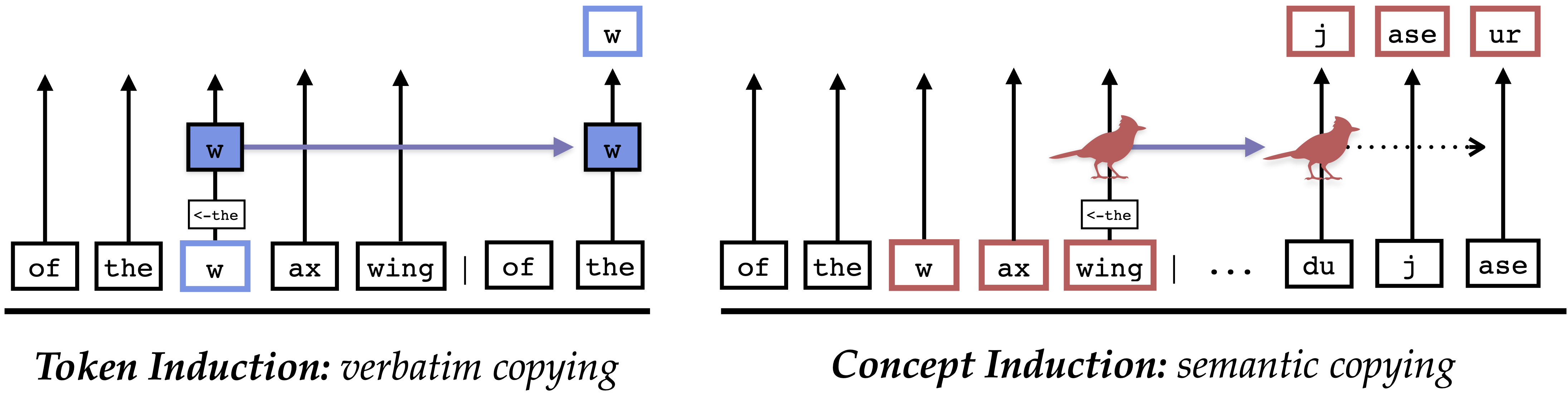

There are two ways to copy text...

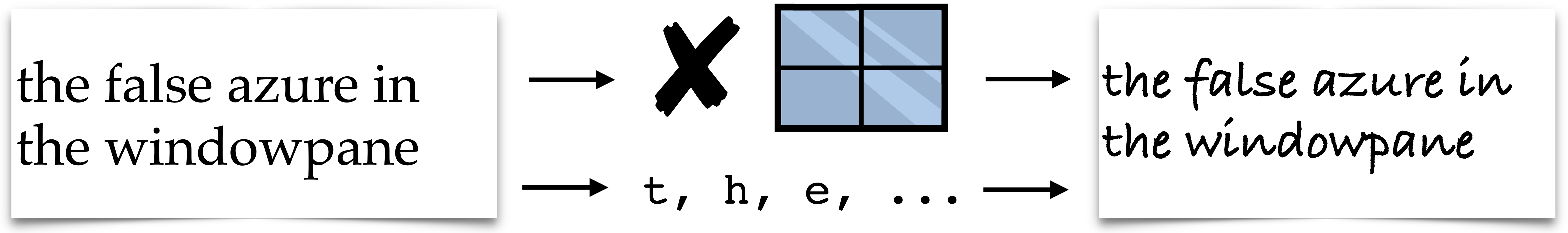

Imagine you are asked to copy a sentence onto a fresh sheet of paper. There are two ways of copying: you could transfer every individual letter (t, h, e, _...), or you could leverage your familiarity with English to copy a few words at a time. For example, if you already know how to write "windowpane," you don't need to individually copy each character: you can just write windowpane.

Inspiration: How do Humans Read?

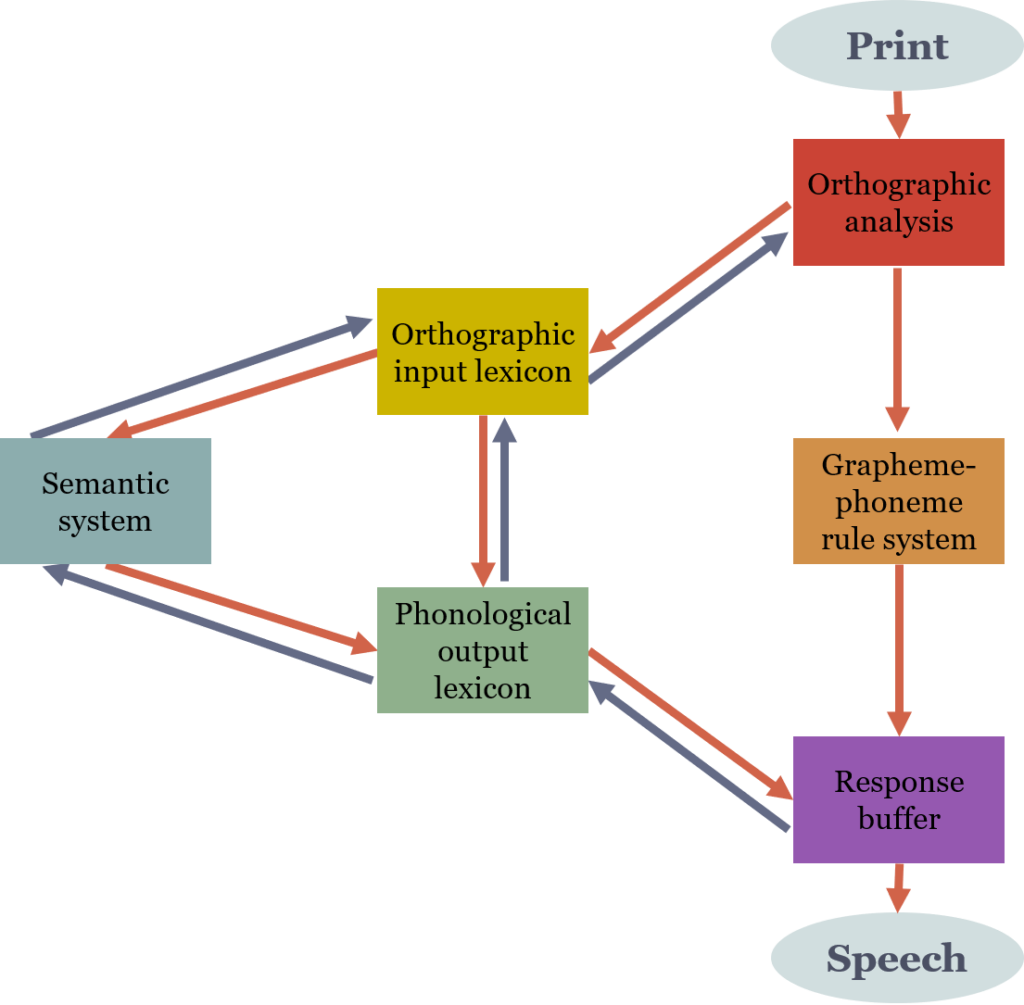

Psychologists have developed a dual-route model of reading, which describes two ways that humans can read text (see this textbook section and Wikipedia). If readers are looking at a word they already know, they can read it via a lexical pathway that processes whole words at a time, as if they are looking up those words in a dictionary. On the other hand, if readers come across a word they do not know, they must use the sub-lexical route, which decodes individual graphemes into phonemes based on the rules of that language.

The existence of a condition called deep dyslexia provides support for this model. After an accident or stroke, people with deep dyslexia can still read and understand word meanings, but often make semantic errors when reading words aloud. For example, someone with deep dyslexia might read the word CANARY as "parrot", or BUCKET as "pail." The first documented case of deep dyslexia came from Marshall and Newcombe (1966), and was later complemented by the discovery of the opposite condition, surface dyslexia, an inability to read without first "sounding out" words (Marshall and Newcombe, 1973).

Our Work: Dual-Route Induction

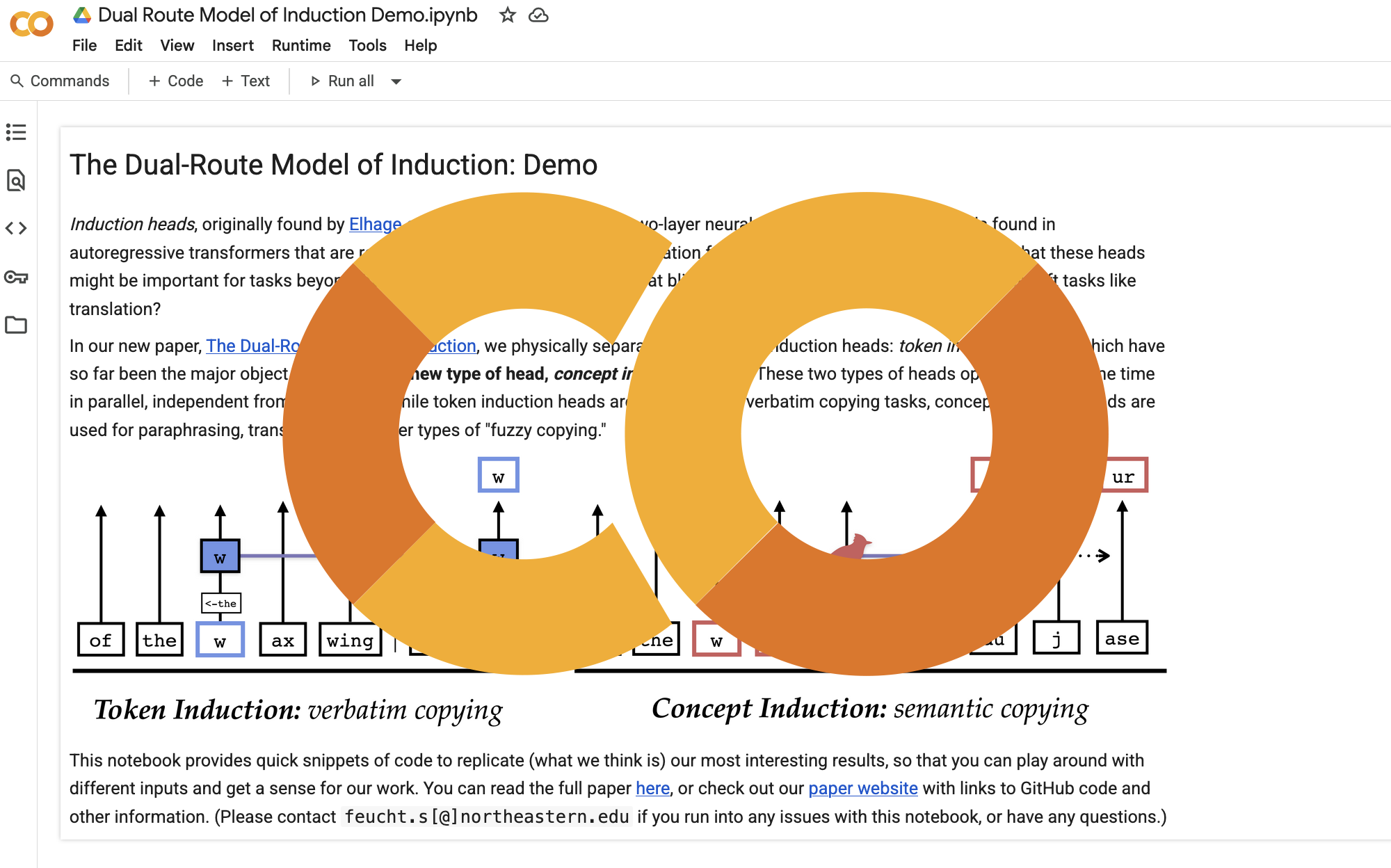

Inspired by this understanding of human reading, we posit a dual-route model of induction. LLMs can either copy text using token-level induction heads (Elhage et al., 2021; Olsson et al., 2022), or using concept-level induction heads, which copy meaningful representations of entire words. In this paper, we identify concept induction heads in four open-source models, and show that they handle word meanings, which also makes them useful for tasks like translating a word between two languages.

How To Find These Heads?

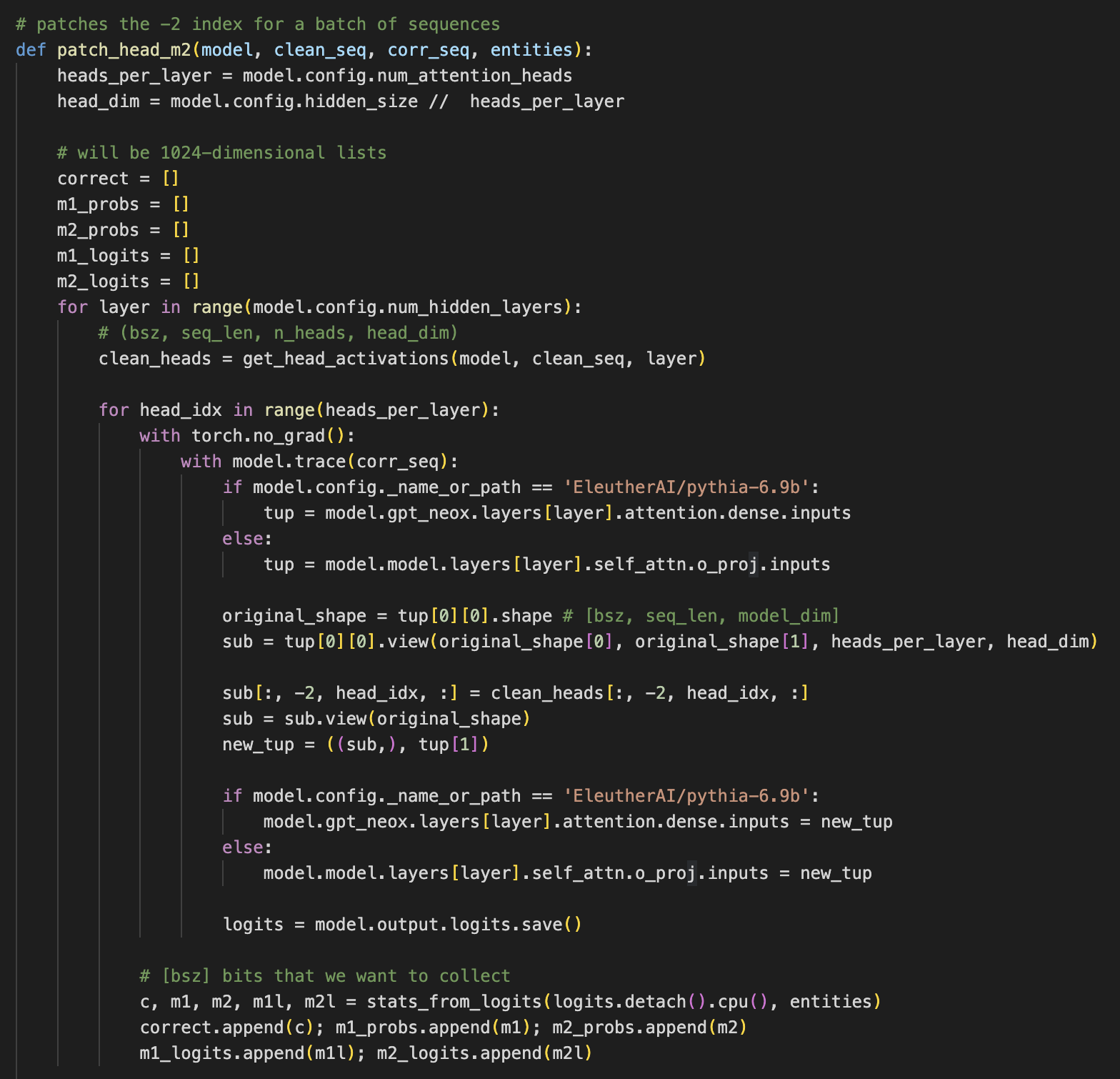

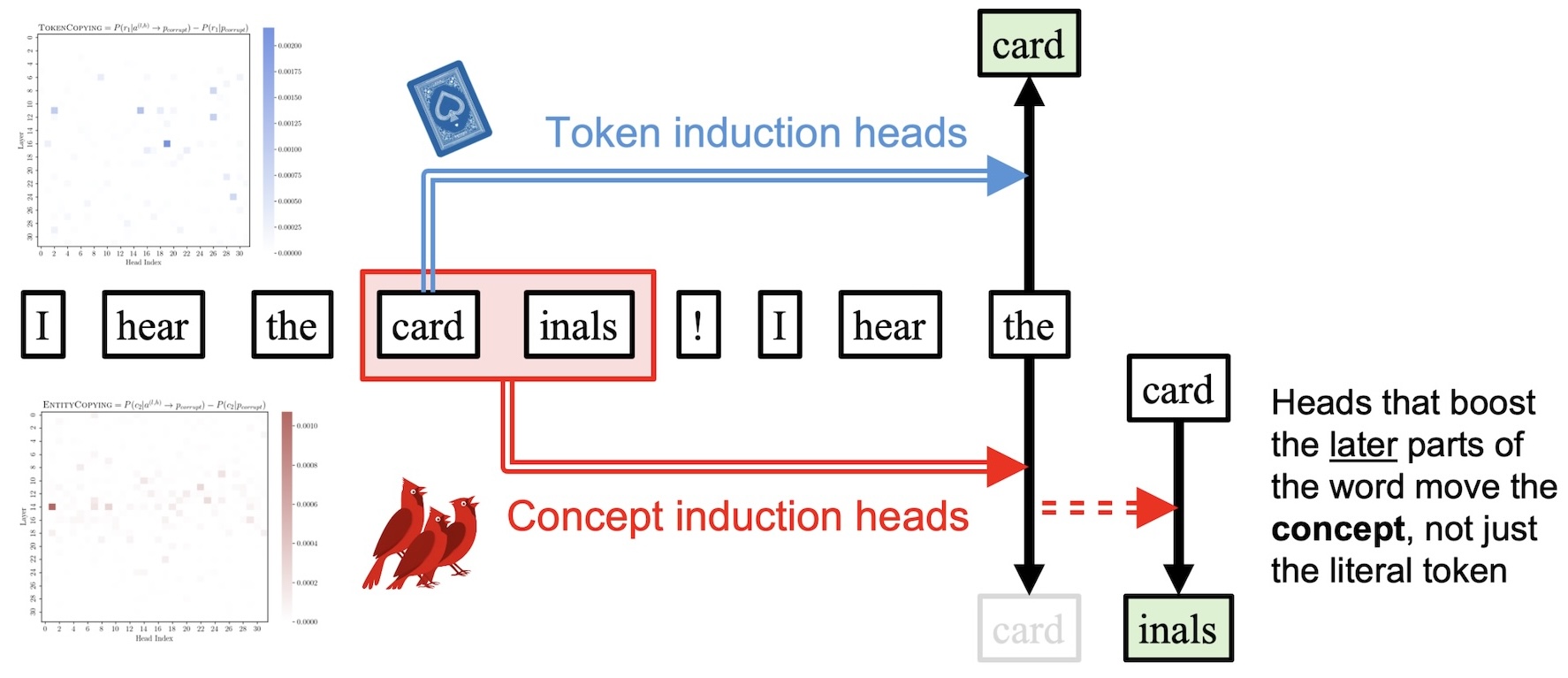

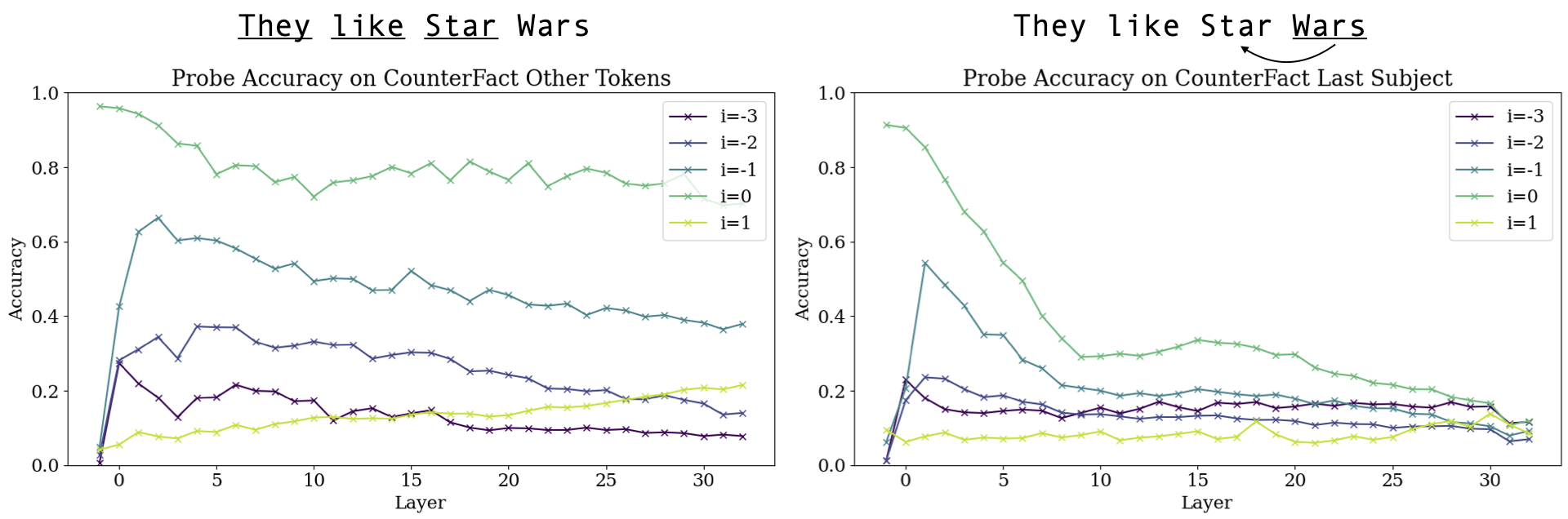

Many words (like "cardinals", shown below) are chopped into nonsensical pieces by tokenizers, so models must learn that card and inals together map to one specific meaning. This process is known as "detokenization" (Elhage et al., 2022; Gurnee et al., 2023). But if the model wants to copy the word "cardinals," it has to convert that latent representation back into the tokens card and inals. We hypothesize that if an attention head is copying over the meaning of one of these multi-token words, it should carry information about all tokens in that word. That means it should increase the probability of future tokens when patched into a new context, not just the next token. So, if we patch an attention head into a new sequence and it increases the probability of the next copied token, we know it is doing some type of copying. But if it also increases the probability of the token after that, then we hypothesize that it is carrying the entire detokenized word representation.
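The scoring idea in this paragraph can be sketched in a few lines. This is only an illustration under stated assumptions, not the implementation from the paper: the function name `copying_scores` and all probability values are hypothetical, and we assume the probabilities of the copied tokens have already been measured with and without a head's output patched in.

```python
from typing import Sequence, Tuple


def copying_scores(
    clean_probs: Sequence[float],
    patched_probs: Sequence[float],
) -> Tuple[float, float]:
    """Return (next_token_gain, future_token_gain) for one attention head.

    clean_probs[i] and patched_probs[i] are the model's probabilities for the
    i-th token of the copied word (i = 0 is the next token, i = 1 is the
    token after that), without and with the head's output patched in.
    """
    if len(clean_probs) < 2 or len(clean_probs) != len(patched_probs):
        raise ValueError("need matching probabilities for at least two tokens")
    next_token_gain = patched_probs[0] - clean_probs[0]
    future_token_gain = patched_probs[1] - clean_probs[1]
    return next_token_gain, future_token_gain


# Hypothetical numbers for the word "cardinals" = ["card", "inals"].
# A token-like head mostly boosts the next token ("card").
token_like_head = copying_scores([0.01, 0.01], [0.40, 0.02])
# A concept-like head also boosts the token after that ("inals").
concept_like_head = copying_scores([0.01, 0.01], [0.15, 0.30])
```

Under these made-up numbers, the second head would be the better candidate for a concept induction head, because its gain extends past the next token.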

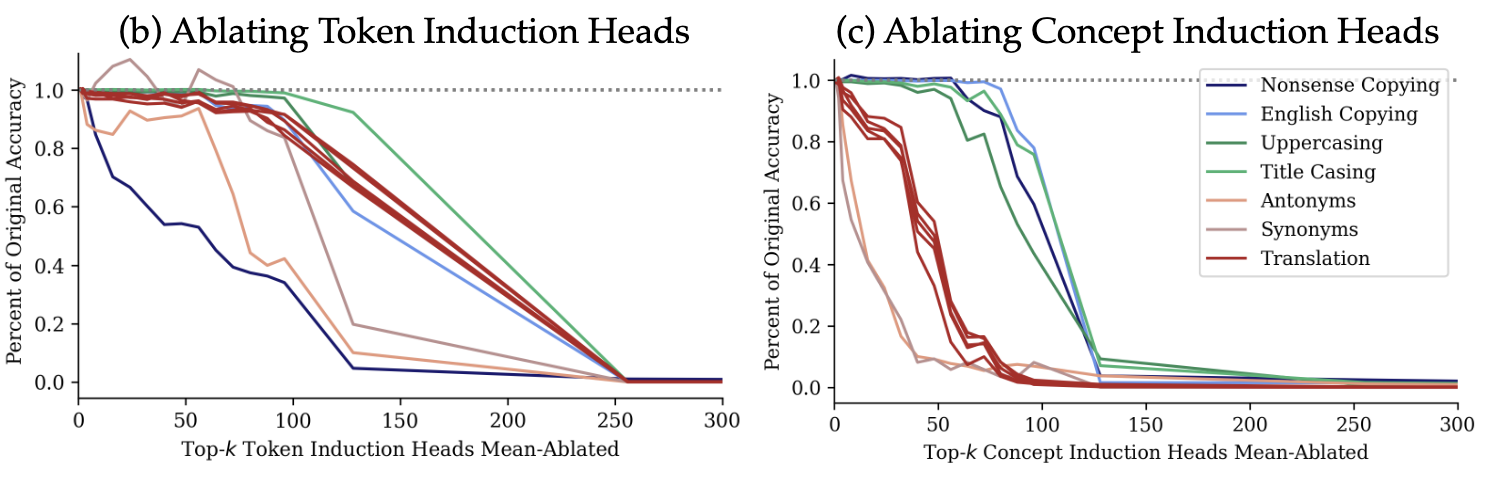

Ablating Concept and Token Heads

After identifying token and concept induction heads via causal intervention (see Section 2 of our paper), we test these heads on a new in-context task that requires models to copy word meanings. For example, we give an LLM a list of ten words in French (1. neige, 2. pomme, ... 9. froid, 10. mort) and prompt the model to output the translation of the last word in English (... 9. cold, 10. ___). We also set up tasks where the model has to copy exactly from English -> English.
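To make the task format concrete, here is a minimal sketch of how such a prompt could be assembled. The helper `build_copy_prompt` is hypothetical, the exact spacing and line breaks used in the paper may differ, and the example uses a shortened four-word list built only from the words quoted above.

```python
from typing import List


def build_copy_prompt(source_words: List[str], target_words: List[str]) -> str:
    """Format a numbered source list followed by a numbered target list.

    The last target word is left blank so that the model has to produce it.
    """
    if len(source_words) != len(target_words) or not source_words:
        raise ValueError("lists must be non-empty and of equal length")
    source_part = " ".join(
        f"{i}. {word}" for i, word in enumerate(source_words, start=1)
    )
    # Show every target word except the last one as context.
    shown = [f"{i}. {word}" for i, word in enumerate(target_words[:-1], start=1)]
    shown.append(f"{len(target_words)}.")
    return f"{source_part}\n{' '.join(shown)}"


french = ["neige", "pomme", "froid", "mort"]
english = ["snow", "apple", "cold", "death"]

# French -> English: the model must translate the last word.
translation_prompt = build_copy_prompt(french, english)
# English -> English: the model only has to copy the last word.
copy_prompt = build_copy_prompt(english, english)
```

Passing the same list twice gives the exact-copy variant of the task, so the two conditions differ only in whether the source list is in another language.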

We find that ablating either set of heads has little impact on Llama-2-7b's ability to copy English words, which makes sense: as our intro example demonstrates, you can copy windowpane -> windowpane whether or not you understand the meaning of that word. Because either mechanism can be used to do this task, we think of these two types of heads as working in parallel.
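As a rough illustration of what ablating a set of heads means, the sketch below replaces the outputs of selected heads with reference values before they are summed into the layer output. This summary does not say which ablation method is used, so mean ablation is an assumption here, and `ablate_heads`, the shapes, and the all-zero reference values are hypothetical stand-ins.

```python
import numpy as np


def ablate_heads(head_outputs, mean_outputs, heads_to_ablate):
    """Replace the outputs of selected heads with reference (mean) outputs.

    head_outputs and mean_outputs both have shape (n_heads, d_model).
    Returns a new array; the input is left unchanged.
    """
    ablated = head_outputs.copy()
    idx = list(heads_to_ablate)
    ablated[idx] = mean_outputs[idx]
    return ablated


rng = np.random.default_rng(0)
head_outputs = rng.normal(size=(4, 8))  # toy per-head outputs at one position
mean_outputs = np.zeros((4, 8))         # hypothetical reference values

ablated = ablate_heads(head_outputs, mean_outputs, heads_to_ablate=[0, 2])
# The attention layer's output is the sum of the per-head contributions.
layer_output = ablated.sum(axis=0)
```

If either route alone can carry the copied word, removing one set of heads should leave the task largely intact, which matches the English copying result described above.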

Ablating Token Induction Heads Causes Paraphrasing

When token induction heads are ablated, we find that LLMs start to paraphrase where they would have otherwise done exact copying. We can think of this as "giving the model deep dyslexia": it is still able to understand semantics, but is no longer able to access exact token information. Much of this rephrasing seems to be on a phrase level, although we do see specific words being replaced with synonyms (e.g., cases is replaced by times). Section 4.2 and Appendix D.4 show examples of this, and you can also download a notebook to generate paraphrases yourself on GitHub.

Figure text: a loop-based snippet (shown three times) and a list-comprehension rewrite of it:

    foo = []
    for i in range(len(bar)):
        if i % 2 == 0:
            foo.append(bar[i])

    foo = [bar[i] for i in range(len(bar)) if i % 2 == 0]

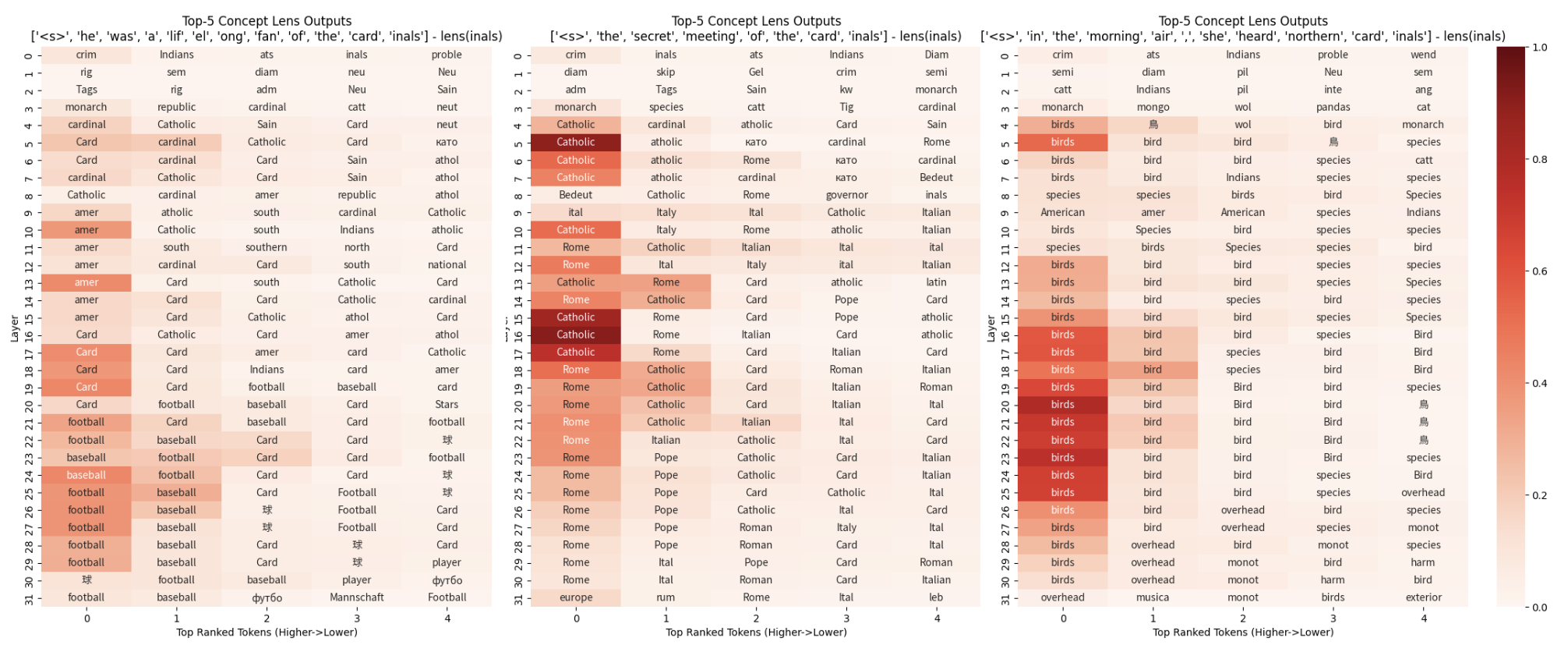

Concept Lens: What Are These Heads Looking At?

In this paper, we show that concept induction heads carry meaningful word representations. That means we can use the weights of these attention heads to develop a "concept lens" that reveals the semantics of arbitrary hidden states in a model. By combining the output and value projections of the top-k concept induction heads, we can obtain a single matrix that extracts semantic information from any hidden state in our model. For example, if we apply this matrix to a hidden state and project it to token space using logit lens (nostalgebraist, 2020), we can see very different semantics encoded in the representation of "cardinals" (sports, Catholicism, or birds) depending on the prompt that the word is found in.
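The construction can be sketched with NumPy. This is a toy version under several assumptions: the weights are random stand-ins rather than real model parameters, "combining" is taken to mean summing each head's value-output product, the head indices are hypothetical, and the final layer norm that logit lens usually applies is omitted.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_head, vocab_size, n_heads = 16, 4, 50, 8

# Random stand-ins for real model weights (hypothetical shapes and values).
W_V = rng.normal(size=(n_heads, d_model, d_head))  # per-head value projections
W_O = rng.normal(size=(n_heads, d_head, d_model))  # per-head output projections
W_U = rng.normal(size=(d_model, vocab_size))       # unembedding matrix


def concept_lens_matrix(head_ids):
    """Sum the value-output products (W_V @ W_O) of the selected heads."""
    return sum(W_V[h] @ W_O[h] for h in head_ids)


top_k_heads = [1, 4, 6]  # hypothetical indices of top-k concept heads
lens = concept_lens_matrix(top_k_heads)  # shape: (d_model, d_model)

# Apply the lens to a hidden state, then project to token space.
hidden_state = rng.normal(size=d_model)
logits = (hidden_state @ lens) @ W_U
top_tokens = np.argsort(logits)[::-1][:5]  # ids of the five highest-scoring tokens
```

With real weights, decoding `top_tokens` back to strings is what would surface the different senses of a word like "cardinals" in different prompts.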

Concept Induction Heads Output Semantic Representations

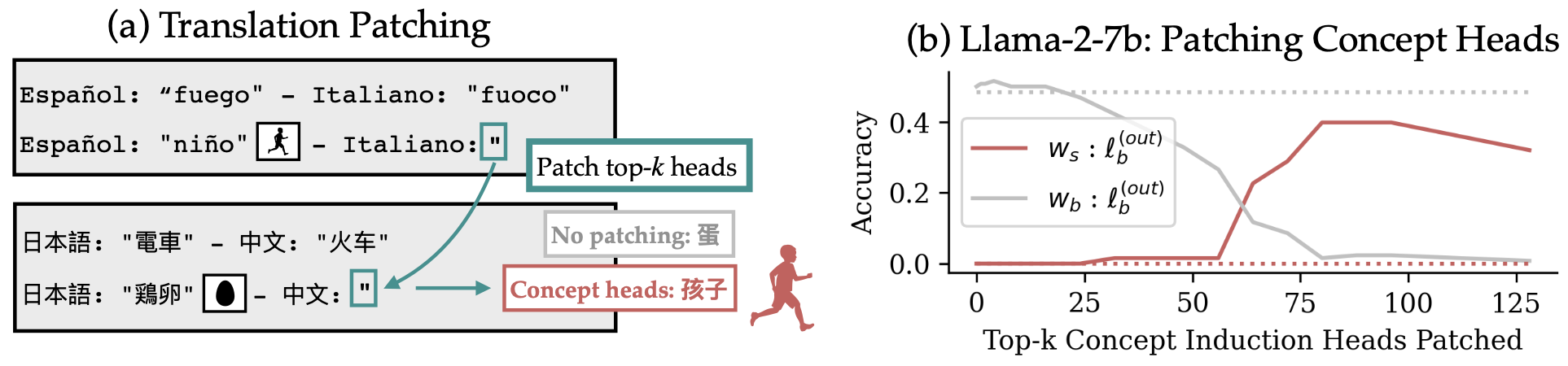

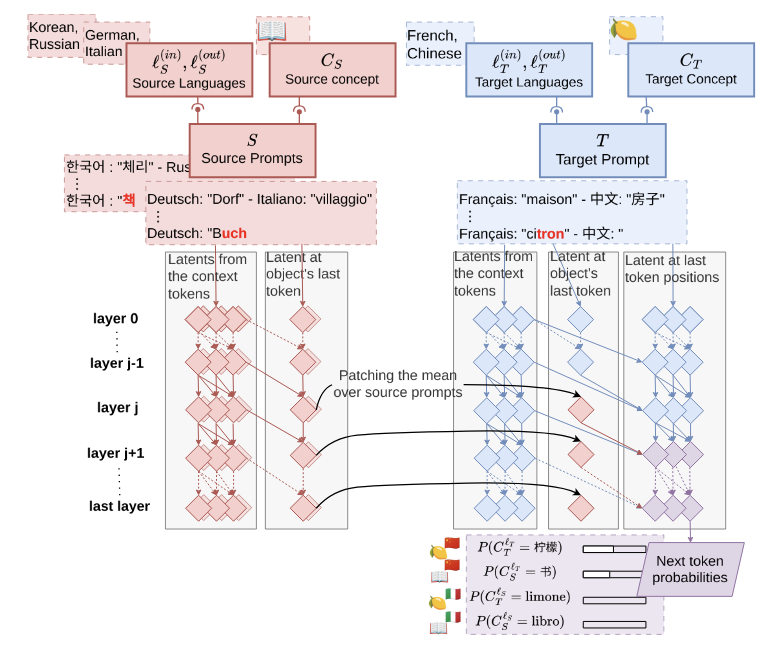

Our experiments show that concept induction heads are important for translation. Dumas et al. (2025) showed that LLMs represent word meanings separately from language; we replicate their experiment in a more targeted way, patching only the outputs of these newly found heads.

Related Work

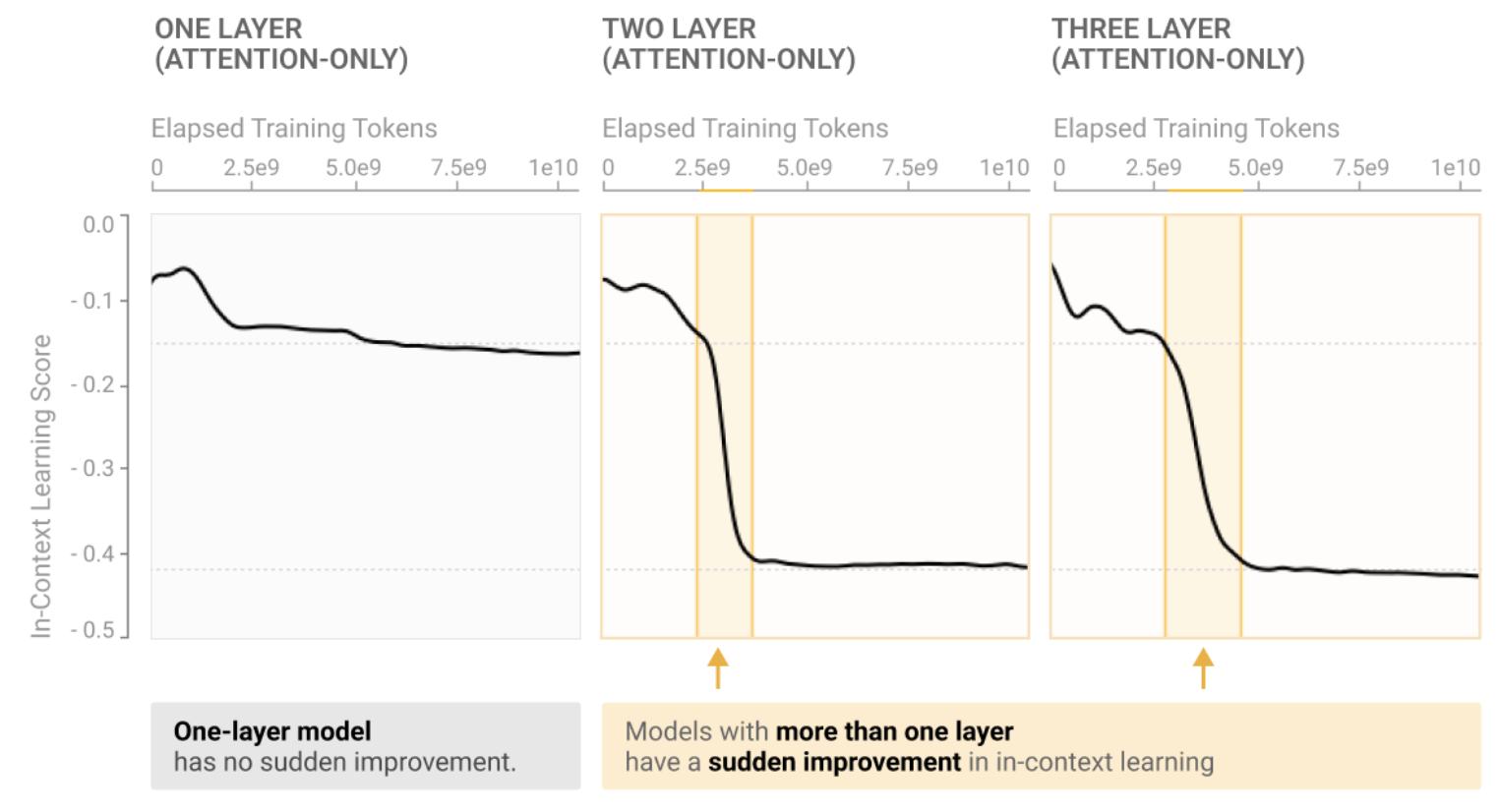

Catherine Olsson, Nelson Elhage, Neel Nanda, Nicholas Joseph, ... Chris Olah. In-Context Learning and Induction Heads. 2022.

Notes: Building on the initial discovery of induction heads in Elhage et al. (2021), the authors investigate the connection between in-context learning (ICL) and induction heads. They find that ICL capabilities are closely related to the development of induction heads.

Clément Dumas, Chris Wendler, Veniamin Veselovsky, Giovanni Monea, Robert West. Separating Tongue from Thought: Activation Patching Reveals Language-Agnostic Concept Representations in Transformers. 2025.

Notes: We build on the work of Dumas et al. (2025) in the second half of this paper. They show that the average of the representations of the same word in multiple languages (e.g., "buch", "libro", "book") can be patched into a new context and decoded in an entirely different language (e.g., 书). We repeat their experiments, except that instead of patching averaged hidden states, we patch the outputs of concept induction heads. We see the same effect, suggesting that these heads are responsible for transporting language-agnostic word representations.

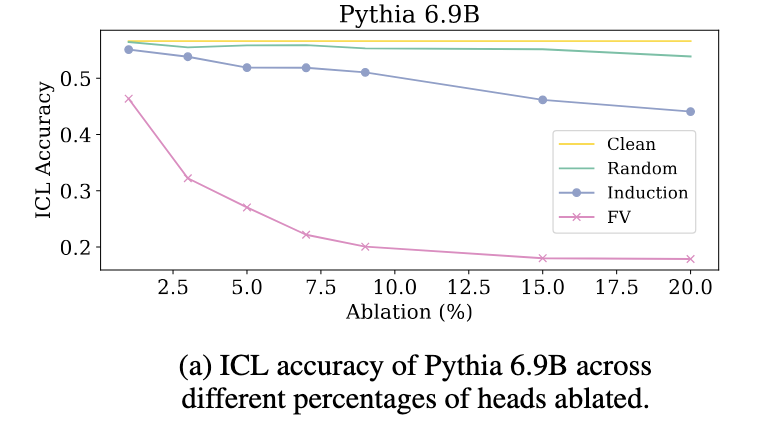

Kayo Yin, Jacob Steinhardt. Which Attention Heads Matter for In-Context Learning? 2025.

Notes: The authors compare token induction heads to function vector heads, finding that FV heads are more important for ICL than token induction heads. Our work sheds light on why this might be: token induction heads, used for verbatim copying, are likely not as useful for ICL tasks as FV and concept induction heads are.

Sheridan Feucht, David Atkinson, Byron Wallace, David Bau. Token Erasure as a Footprint of Implicit Vocabulary Items in LLMs. 2024.

Notes: Our own prior work showed that the last tokens of multi-token words/entities strangely "erase" or lose previous-token information in early layers. We suspected that this is due to the process of detokenization, or conversion from a model's literal token vocabulary to its implicit internal vocabulary (also described by Kaplan et al. (2024)). Concept induction heads seem to operate over these same implicit vocabulary units.

How to cite

This work was accepted at COLM 2025. It can be cited as follows:

bibliography

Sheridan Feucht, Eric Todd, Byron Wallace, and David Bau. "The Dual-Route Model of Induction." Second Conference on Language Modeling, arXiv:2504.03022 (2025).

bibtex

@inproceedings{feucht2025dualroute,
  title={The Dual-Route Model of Induction},
  author={Sheridan Feucht and Eric Todd and Byron Wallace and David Bau},
  booktitle={Second Conference on Language Modeling},
  year={2025},
  url={https://arxiv.org/abs/2504.03022}
}